Document Management RAG

A production-ready, full-stack Retrieval-Augmented Generation (RAG) system that runs entirely on free-tier cloud services. Upload documents, perform semantic search, and chat with an LLM using your own data.

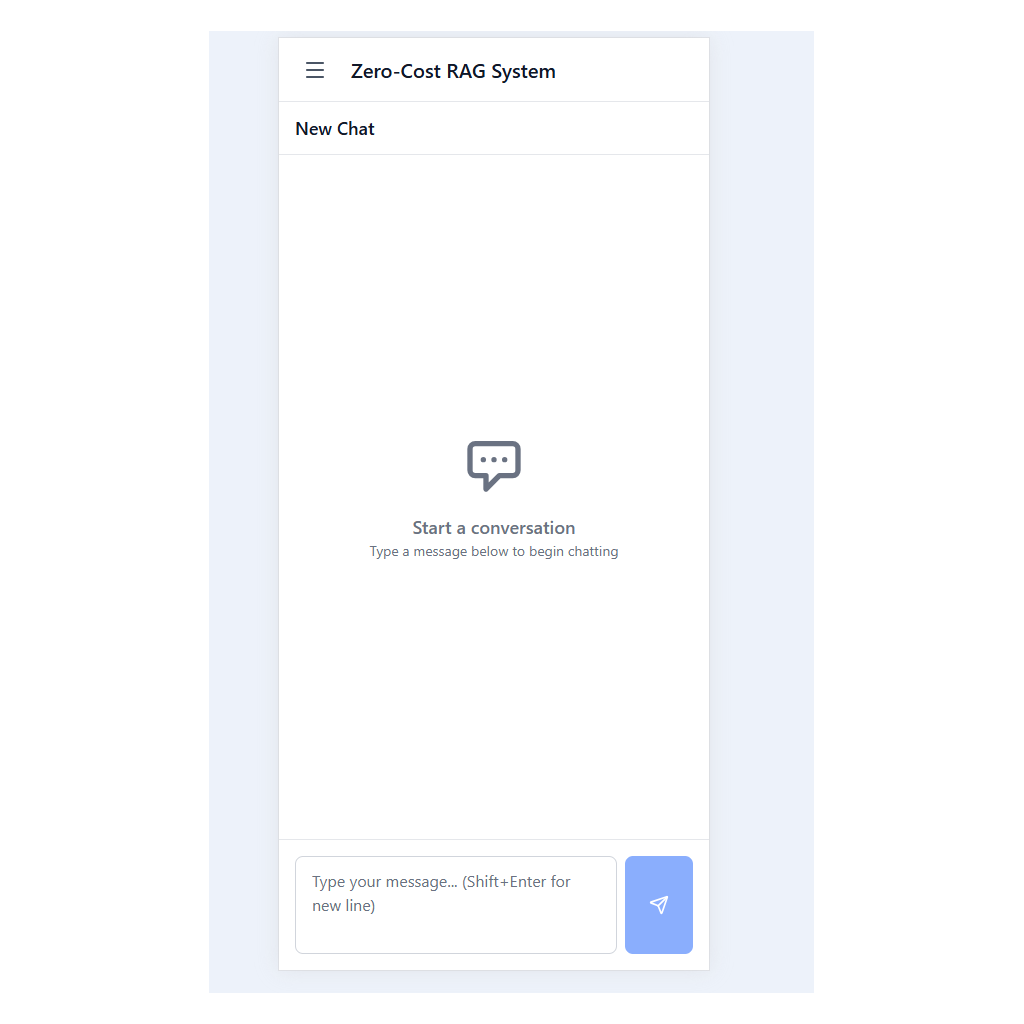

Document Management RAG is an open-source RAG platform that lets users upload documents (PDF, DOCX, TXT), run semantic search over them, and chat with an LLM grounded in the context of their own files. The backend is built with FastAPI, uses the Hugging Face Inference API for the LLM and embeddings, and uses Qdrant for vector search. The frontend is a React + TypeScript single-page application with a ChatGPT-like interface, real-time streaming, and document upload. The entire stack is designed for zero-cost deployment on free-tier services such as Render, Qdrant Cloud, and the Hugging Face Inference API.

- ✓ Async FastAPI backend with SSE streaming
- ✓ Hugging Face Inference API for LLM and embeddings
- ✓ Qdrant vector database for semantic search
- ✓ JWT authentication (configurable)
- ✓ Document upload and chunking (PDF, DOCX, TXT)
- ✓ Modern React + TypeScript frontend with ChatGPT-like UI
- ✓ Real-time streaming chat responses
- ✓ Dark mode and responsive design
- ✓ Dockerized for local and cloud deployment

Technical Deep Dive

Staying within free-tier API quotas for Hugging Face and Qdrant

Solution

Optimized API usage and implemented rate limiting to avoid quota overruns
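To make the rate-limiting idea concrete, here is a minimal sketch of a sliding-window limiter that could sit in front of outbound API calls. The class name, its parameters, and the limits used in the usage note are illustrative assumptions; the project's actual limiter and the real free-tier quotas are not described here.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `max_calls` calls per `period` seconds.

    Hypothetical helper for illustration; not taken from the project's code.
    """

    def __init__(self, max_calls: int, period: float, clock=time.monotonic):
        self.max_calls = max_calls
        self.period = period
        self.clock = clock    # injectable so the limiter can be tested without sleeping
        self.calls = deque()  # timestamps of the calls inside the current window

    def try_acquire(self) -> bool:
        """Record a call and return True if quota remains, otherwise return False."""
        now = self.clock()
        # Drop timestamps that have aged out of the window.
        while self.calls and now - self.calls[0] >= self.period:
            self.calls.popleft()
        if len(self.calls) < self.max_calls:
            self.calls.append(now)
            return True
        return False
```

A caller might create `SlidingWindowLimiter(max_calls=30, period=60.0)` (placeholder numbers, not a documented quota) and return an HTTP 429 or queue the request whenever `try_acquire()` returns `False`.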

Implementation

SSE Streaming Endpoint (FastAPI)

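```python
# EventSourceResponse comes from the sse-starlette package.
from sse_starlette.sse import EventSourceResponse

# `app`, `ChatRequest`, and `llm_service` are defined elsewhere in the backend.
@app.post('/api/chat/')
async def chat_endpoint(request: ChatRequest):
    async def event_generator():
        # Forward each chunk from the LLM as one SSE "message" event.
        async for chunk in llm_service.stream_response(request):
            yield {'event': 'message', 'data': chunk}

    return EventSourceResponse(event_generator())
```

Efficiently chunking and indexing documents for semantic search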

Solution

Used Qdrant for fast vector search and chunked text for context retrieval
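As an illustration of the chunking step, the sketch below splits text into fixed-size, overlapping character windows before embedding. The function name, the character-based strategy, and the default sizes are assumptions made for this example; the project may chunk by tokens, sentences, or pages instead.

```python
def chunk_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split `text` into windows of `chunk_size` characters that overlap by `overlap`.

    Hypothetical helper; sizes are placeholders, not the project's settings.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")

    step = chunk_size - overlap  # how far the window advances each iteration
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the window has reached the end of the text,
        # so no redundant tail chunk is emitted.
        if start + chunk_size >= len(text):
            break
    return chunks
```

The overlap keeps a sentence that straddles a boundary visible in both neighboring chunks, which tends to help retrieval at the cost of storing slightly more vectors.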

Implementation

Frontend SSE Streaming Hook

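```typescript
import { useEffect } from 'react';

// Subscribes to a Server-Sent Events stream and forwards each parsed message.
// EventSource always issues GET requests, so `url` must point at a GET-capable streaming route.
const useSSE = (url: string, onMessage: (data: any) => void) => {
  useEffect(() => {
    const es = new EventSource(url);
    // Each event's payload is expected to be a JSON string.
    es.onmessage = (event) => onMessage(JSON.parse(event.data));
    // Close the connection on unmount or when the URL changes.
    return () => es.close();
  }, [url]);
};
```

Providing real-time streaming responses in the chat UI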

Solution

Implemented SSE endpoints in FastAPI and streaming UI in React
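For readers unfamiliar with what travels over the wire, the following sketch serializes a single Server-Sent Event frame by hand. The `format_sse` helper is hypothetical and only illustrates the text format; in a FastAPI backend a response class would normally handle this serialization.

```python
from typing import Optional


def format_sse(data: str, event: Optional[str] = None) -> str:
    """Serialize one Server-Sent Event frame (illustrative helper)."""
    lines = []
    if event is not None:
        lines.append(f"event: {event}")
    # A multi-line payload needs one `data:` field per line.
    for line in data.split("\n"):
        lines.append(f"data: {line}")
    # A blank line terminates the event.
    return "\n".join(lines) + "\n\n"
```

On the browser side, `EventSource` rejoins consecutive `data:` lines with newlines and dispatches events named `message` (or events with no name) to the `onmessage` handler.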